Abstract

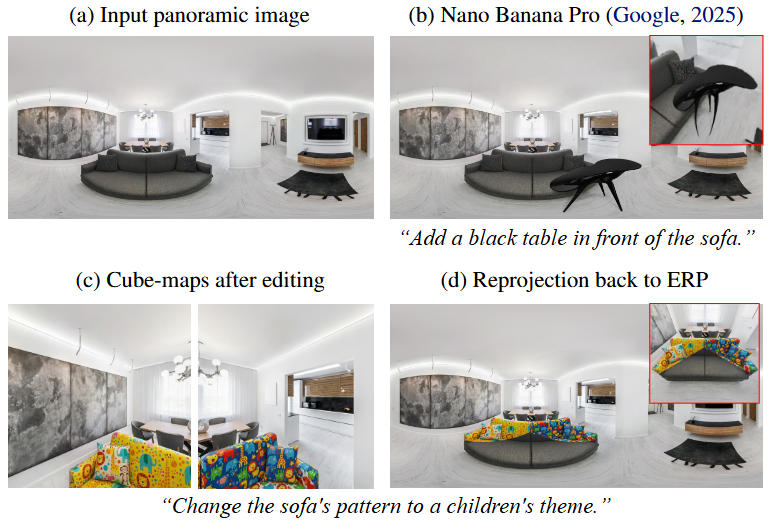

Editing panoramic images is crucial for creating realistic 360° visual experiences, yet existing perspective-based image editing methods fail to model the spatial structure of panoramas. Conventional cube-map decompositions attempt to bypass this issue but inevitably break global consistency due to their mismatch with spherical geometry.

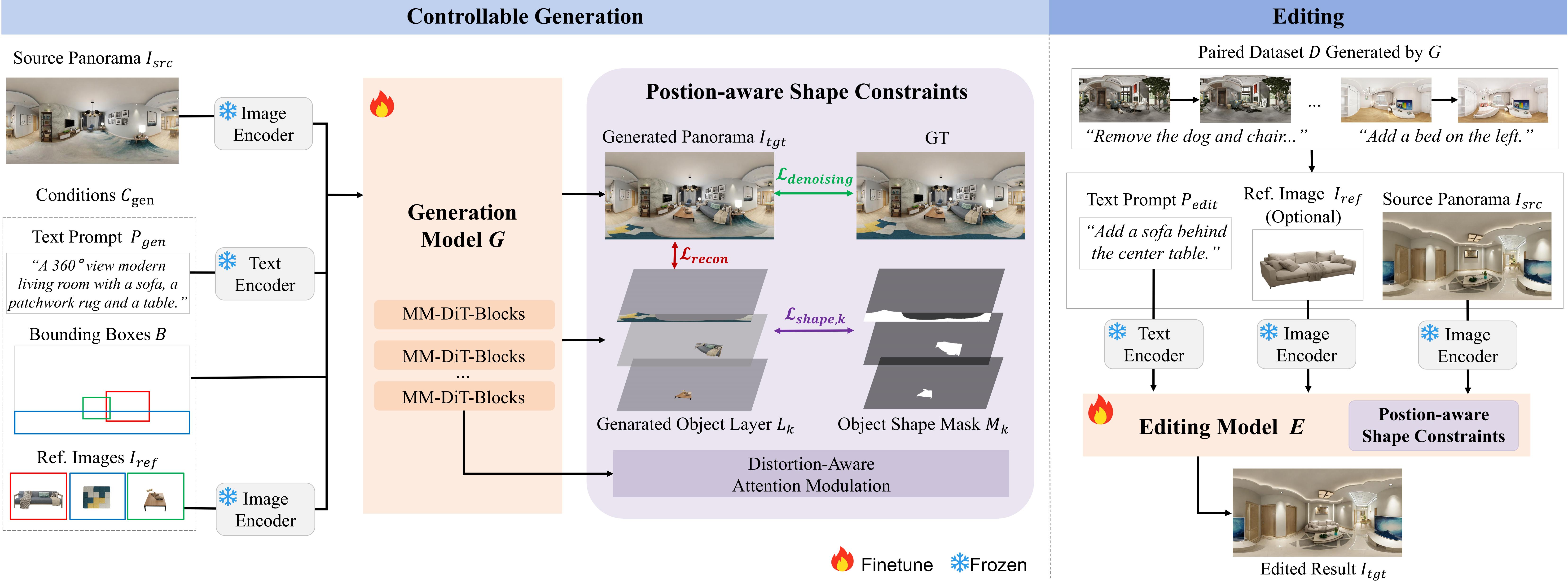

Motivated by this insight, we reformulate panoramic editing directly in the equirectangular projection (ERP) domain and present World-Shaper, a unified geometry‑aware framework for panoramic image editing.

To overcome the scarcity of paired data, we adopt a novel generate‑then‑edit paradigm, in which a controllable generation model synthesizes panoramic pairs for supervised editing learning.

To address geometric distortion, we design a geometry‑aware learning strategy that enables distortion‑adaptive reasoning and consistent manipulation across latitudes through spatially adaptive supervision and progressive curriculum training.

Extensive experiments on our new benchmark PEBench demonstrate that World‑Shaper achieves superior geometric consistency, editing fidelity, and text controllability compared to state‑of‑the‑art methods, enabling coherent and flexible 360° visual world creation.

Motivation

The Challenge: Perspective Editing on Panoramas

Editing panoramas using perspective tools leads to:

- Loss of spherical layout and global consistency

- Artifacts across cube-map boundaries

- Inconsistent attributes across latitudes

- Difficulty preserving geometry-aware semantics

Our Insight: ERP-aware Editing

Operate directly in the equirectangular projection (ERP) domain with geometry-aware supervision to:

- Maintain spherical layout and continuity

- Enable distortion-adaptive reasoning

- Unify editing with consistent latitudinal behavior

- Bridge generation and editing quality for 360° scenes

Approach

Overview. We propose a unified pipeline that performs panoramic editing directly in the ERP domain, enabling geometry-consistent reasoning and manipulation.

Training. We adopt a generate‑then‑edit paradigm for data, combined with geometry‑aware learning strategies including distortion‑adaptive objectives and spatially adaptive supervision.
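
The page does not spell out the form of the spatially adaptive supervision, so the following is only an illustrative sketch of one common latitude-aware choice, not the World-Shaper objective. It assumes a cos(latitude) weight per ERP row, which reflects the fact that ERP pixels near the poles cover less area on the sphere than pixels near the equator. The function names `latitude_weights` and `weighted_l2` are hypothetical, and the actual training loss may weight latitudes differently.

```python
import numpy as np


def latitude_weights(height: int, width: int) -> np.ndarray:
    """Per-pixel cos(latitude) weights for an ERP image, normalised to mean 1.

    Assumption: row 0 is the top of the panorama (near the north pole) and
    latitude is sampled at pixel centres.
    """
    v = (np.arange(height) + 0.5) / height        # vertical position in (0, 1)
    lat = (0.5 - v) * np.pi                       # latitude in (-pi/2, pi/2)
    weights = np.repeat(np.cos(lat)[:, None], width, axis=1)
    return weights / weights.mean()


def weighted_l2(pred: np.ndarray, target: np.ndarray, weights: np.ndarray) -> float:
    """Mean squared error over (H, W, C) images, weighted per pixel by (H, W) weights."""
    err = (pred - target) ** 2
    return float((err * weights[..., None]).mean())
```

With these weights, the same per-pixel error counts for more at the equator than near the poles, so heavily stretched polar rows do not dominate the loss simply because they occupy many pixels.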

Results

Qualitative comparison with four state-of-the-art methods on panorama editing. White boxes mark the regions selected for visualization, and the red boxes show the corresponding edited areas in perspective view.

Applications

Our method supports diverse applications, including 3D world generation and indoor design.

3D World Generation

Users can begin by generating a panorama from either a text prompt or a local-view input image. A pre-trained depth estimation method is then applied to obtain the corresponding depth map of the panorama. Using this depth information, the 2D pixels are lifted into 3D points, and a sequence of camera poses is defined. The panorama is then rendered along these camera trajectories, and our method is employed to inpaint any missing regions in the rendered views. Finally, a panoramic Gaussian Splatting (GS) representation is optimized using the inpainted panorama frames.
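
The lifting and re-rendering steps above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: it assumes depth is measured along each viewing ray, longitude runs from -π to π left to right, latitude runs from π/2 to -π/2 top to bottom, and the new camera is only translated. All function names are hypothetical. Depth estimation, the inpainting model, and Gaussian Splatting optimization are outside the scope of the sketch.

```python
import numpy as np


def erp_directions(height: int, width: int) -> np.ndarray:
    """Unit ray direction for every ERP pixel centre, shape (H, W, 3).

    Assumed axes: x to the right, y up, z forward.
    """
    u = (np.arange(width) + 0.5) / width          # horizontal position in (0, 1)
    v = (np.arange(height) + 0.5) / height        # vertical position in (0, 1)
    lon, lat = np.meshgrid((u - 0.5) * 2.0 * np.pi, (0.5 - v) * np.pi)
    x = np.cos(lat) * np.sin(lon)
    y = np.sin(lat)
    z = np.cos(lat) * np.cos(lon)
    return np.stack([x, y, z], axis=-1)


def lift_to_points(depth: np.ndarray) -> np.ndarray:
    """Lift an ERP depth map (H, W) to 3D points (H, W, 3) in the camera frame."""
    height, width = depth.shape
    return erp_directions(height, width) * depth[..., None]


def project_to_erp(points: np.ndarray, cam_pos: np.ndarray, height: int, width: int):
    """Project 3D points into the ERP image of a camera translated to cam_pos.

    Returns integer pixel rows and columns, one per point.
    """
    rel = points.reshape(-1, 3) - cam_pos
    dist = np.linalg.norm(rel, axis=-1)
    d = rel / np.maximum(dist, 1e-8)[:, None]     # unit directions from the new camera
    lon = np.arctan2(d[:, 0], d[:, 2])
    lat = np.arcsin(np.clip(d[:, 1], -1.0, 1.0))
    col = np.floor((lon / (2.0 * np.pi) + 0.5) * width).astype(int) % width
    row = np.clip(np.floor((0.5 - lat / np.pi) * height).astype(int), 0, height - 1)
    return row, col


def hole_mask(depth: np.ndarray, cam_pos) -> np.ndarray:
    """Boolean (H, W) mask of pixels that receive no point after moving the camera.

    These are the regions an inpainting model would be asked to fill.
    """
    height, width = depth.shape
    points = lift_to_points(depth)
    row, col = project_to_erp(points, np.asarray(cam_pos, dtype=float), height, width)
    covered = np.zeros((height, width), dtype=bool)
    covered[row, col] = True
    return ~covered
```

Calling `hole_mask(depth, cam_pos)` for each pose along a trajectory gives the per-frame regions to inpaint. A real renderer would also resolve occlusions, for example with a depth buffer, which this sketch omits.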

Indoor Design

Users select a piece of furniture from a catalog and specify a location in the room; our method then seamlessly integrates the object into the panoramic scene, adapting it to the spherical geometry and lighting conditions for a photorealistic visualization.

Video Demo

3D World Generation visualization: the left column shows the panorama image, and the right column shows the reconstructed 3D world video.